Ethics of Autonomous Vehicles: beyond the trolley dilemma

This is a short commentary I wrote in 2017 on Patrick Lin’s “Why Ethics Matters for Autonomous Cars”.

The book Autonomous Driving formulates a set of use cases that serve as a reference point for discussing the technical, legal and social aspects of autonomous driving (Wachenfeld et al. 2016). In addition, Lin sketches scenarios that require autonomous vehicles (AVs) to make ethical choices. An advantage of this approach is that these scenarios can take the form of thought experiments, which are not obstructed by the fact that no fully autonomous vehicles are in use as yet: they nevertheless draw out moral intuitions that in turn help us formulate how we expect robots to react in similar situations (Malle 2016, 250). One classical thought experiment in particular, the trolley dilemma, seems to be experiencing a revival in the context of AVs (Lin 2016; Contissa, Lagioia, and Sartor 2017; Nyholm and Smids 2016). However, despite its popularity, I argue that ethical questions concerning AVs go beyond the scope of the original trolley dilemma.

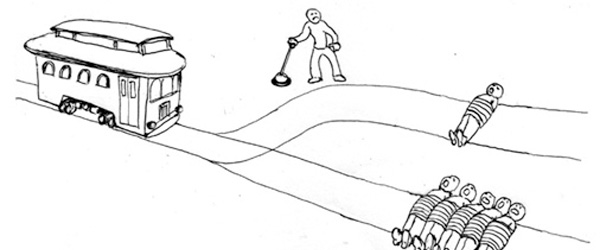

In its classical form, the trolley problem situates an observer near a switch, overlooking a trolley on its way to kill five unsuspecting people working on the tracks. Using the switch, however, would divert the trolley so that it kills only one person. The question then is: is it right for the observer to pull the switch? It is easy to imagine such a trolley problem for AVs, where the “switching” decision has to be made by the AV, and indeed Lin does so (Lin 2016, 78-79). However, whereas trolley problems usually concern the question of what the right choice is, in the case of AVs they also include the underlying question of who made the choice and is correspondingly responsible for it.

Two cases sketched in Autonomous Driving, let’s say A and B, are particularly interesting in this regard. Case A concerns fully automated driving with the extension of a human driver who is able to take over driving control at any moment. In case B, the driving task is performed completely independently of the passenger, which also entails that the passenger cannot take over driving control (Wachenfeld et al. 2016, 19). In case A, the trolley problem arguably maintains its original form: when a human is able to take over control, the switching decision also remains the responsibility of the human driver, but only as long as we ignore the practical issue that handing over control to the human driver is unlikely to be fast enough (Lin 2016, 71). In case B, however, the human driver cannot simply be held responsible for the life-or-death decision, and thus case B extends beyond the scope of the original trolley problem.

The AV would make such life-or-death decisions based on programmed algorithms and cost functions. I would therefore say that the real ethical decisions are no longer made in a split second, as in the trolley problem, but are instead moved to the design stage, where such time constraints do not apply (Nyholm and Smids 2016, 1280-2). A particular difference with the trolley problem is thus that responsibility for possible deaths is distributed over a set of stakeholders. The answer to the question of who is to blame has large consequences, for example for producers of AVs (vulnerability to lawsuits) or insurance companies (dealing with damage claims). I agree with Lin that, regardless of the answer, transparency of the decision-making should be central to AV ethics (Lin 2016, 79).

In that sense, one recent suggestion is particularly interesting as an addition to Lin’s deliberations in the context of the defined use cases. In case B, a way to involve the user in the design process again is to use an ‘ethical knob’ that makes the preference for the survival of passengers or third parties explicit in moral modes ranging from altruistic through impartial to egoistic (Contissa, Lagioia, and Sartor 2017). These modes determine the “autonomous” decision-making of the AV, but are deliberately set by the user. In this manner, the moral load of using the AV becomes transparent to the user, which in turn may be a decisive factor for quantifying guilt in case of lawsuits and insurance claims. To conclude, in designing AVs, robot ethics and machine morality can be connected by fine-tuning the moral competences AVs have with respect to a dynamic set of situations and preferences (cf. Malle 2016).
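To make the idea of a knob feeding into a cost function a little more concrete, here is a minimal sketch in Python. It is my own toy illustration, not the formal model of Contissa, Lagioia, and Sartor: the names `Manoeuvre`, `weighted_cost` and `choose`, the linear weighting, and the harm estimates are all hypothetical assumptions, chosen only to show how a single user-set value could shift the outcome of an otherwise “autonomous” decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Manoeuvre:
    """A candidate action with made-up expected-harm estimates in [0, 1]."""
    name: str
    passenger_harm: float
    third_party_harm: float


def weighted_cost(option: Manoeuvre, knob: float) -> float:
    """Cost of an option under a knob setting in [0, 1] (assumed linear weighting).

    knob = 0.0 -> altruistic (only third-party harm counts)
    knob = 0.5 -> impartial (both count equally)
    knob = 1.0 -> egoistic (only passenger harm counts)
    """
    if not 0.0 <= knob <= 1.0:
        raise ValueError("knob must lie in [0, 1]")
    return knob * option.passenger_harm + (1.0 - knob) * option.third_party_harm


def choose(options: list[Manoeuvre], knob: float) -> Manoeuvre:
    """Pick the option with the lowest weighted cost (the first one wins ties)."""
    return min(options, key=lambda o: weighted_cost(o, knob))


# Hypothetical scenario: staying in lane endangers third parties,
# swerving endangers the passenger.
options = [
    Manoeuvre("stay_in_lane", passenger_harm=0.1, third_party_harm=0.9),
    Manoeuvre("swerve", passenger_harm=0.8, third_party_harm=0.1),
]

for knob in (0.0, 0.5, 1.0):
    print(knob, choose(options, knob).name)
```

With these invented numbers, the altruistic and impartial settings select the swerve, while the egoistic setting keeps the car in its lane. The point of the sketch is only that the knob setting is an explicit, inspectable input to the decision, which is what would make the user's moral preference transparent after the fact.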

672 words.

N.B. click here for an alternative solution to the trolley problem.

References

Contissa, Giuseppe, Francesca Lagioia, and Giovanni Sartor. 2017. “The Ethical Knob: ethically-customisable automated vehicles and the law.” Artificial Intelligence and Law 25 (3):365-378. doi: 10.1007/s10506-017-9211-z.

Lin, Patrick. 2016. “Why Ethics Matters for Autonomous Cars.” In Autonomous Driving, edited by Markus Maurer, J. Christian Gerdes, Barbara Lenz and Hermann Winner, 69-82. Springer-Verlag Berlin Heidelberg.

Malle, Bertram F. 2016. “Integrating robot ethics and machine morality: the study and design of moral competence in robots.” Ethics and Information Technology 18 (4):243-256. doi: 10.1007/s10676-015-9367-8.

Nyholm, Sven, and Jilles Smids. 2016. “The Ethics of Accident-Algorithms for Self-Driving Cars: an Applied Trolley Problem?” Ethical Theory and Moral Practice 19 (5):1275-1289. doi: 10.1007/s10677-016-9745-2.

Wachenfeld, Walther, Hermann Winner, J. Christian Gerdes, Barbara Lenz, Markus Maurer, Sven Beiker, Eva Faedrich, and Thomas Winkle. 2016. “Use Cases for Autonomous Driving.” In Autonomous Driving, edited by Markus Maurer, J. Christian Gerdes, Barbara Lenz and Hermann Winner, 9-38. Springer-Verlag Berlin Heidelberg.