- A. Moving resource blocks within the same Terraform state, for example to a child module.

- B. Moving a resource block to another module altogether, for example because over time you have developed dependency cycles and refactoring is overdue.

- C. Removing a resource from Terraform management altogether without deleting the resource.

- D. Bring an existing resource under Terraform management without Terraform trying to create a resource that already exists

- Make the refactor in your IaC

- In a Terraform plan, observe which resources are deleted and then recreated at the new path

- For each resource, define a

movedblock.- You can do this in a separate file, or keep the

movedblocks next to the moved resource.

- You can do this in a separate file, or keep the

- Verify that in the final Terraform plan there are no creations and deletions. If there are in-place updates, verify that they are intended.

- For example, if you accidentally import the right resource but from the wrong subscription (for example your staging environment instead of your dev environment) this may show up as an in-place update, depending on if that particular field forces replacement for that given resource.

- Remove the resource from the IaC of module A

- Record this removal with a

removedblock (available since Terraform 1.7+ ) - Prior to Terraform 1.7, this requires you to manually run

terraform state rm - Include the resource in the IaC of module B

- Record an

importblock that maps the existing resource to the new location block that maps the existing resource to the new location - Ensure that the Terraform plan for both modules do not include deletions and creations. Carefully inspect possible in-place updates and ensure they are innocent.

- An innocent example of an in-place update is capitalization in a resource group name if the resource name is case insensitive.

- Migrate a resource from A to B

- Migrate a resource from B to A

- The

mainbranch is the single source of truth, do not deploy any infrastructure (except for your dev environment) from other branches. Enforce this in your deployment pipelines. - In staging and production environments, nobody should be able to manually deploy infrastructure using a

terraform apply, but nevertheless you may have forgotten to deactivate an older deployment pipeline that can apply changes and may automatically trigger. If the previous safeguard is in place you should nevertheless only be able to deploy from the main branch. - Exploratory notebooks should have a single author who is the owner of the analysis

- A minimal version control approach is possible: others do not push changes in that notebook, unless explicitly coordinated with the owner of the notebook.

- This should avoid having to review unreadable diffs

- And also avoid merge conflicts altogether

- Mention the date and the author name of the analysis in the file name to make the historical character and owner explicit

- Clear all cell outputs before committing a notebook to the repository

- This avoids cluttering Git diffs with anything other than code, although this will not prevent changes in metadata to be pushed.

- If notebook output has to be put under version control, convert the notebook to html (using “save as” or

nbconvert) and commit the html instead.

- Store notebooks apart from the main source code of a project. I personally use a dedicated

notebooksfolder.- This also allows you to more easily trigger a separate workflow for notebooks (see below).

- This keeps the main source code of a project uncluttered with dated notebooks.

- When you need to diff a notebook, export to Python using

jupyter nbconvertand diff the Python file instead. - When you need output, use

nbconvertto execute the notebook and convert the output to html - Before committing a notebook, clear the outputs to minimize unreadable blobs

- Install as standalone command using pip

pip install jupytext --upgrade- Handy! Can be included in requirements.txt

- Install as Jupyter notebook extension

jupyter nbextension install --py jupytext [--user]- Follow by:

jupyter nbextension enable --py jupytext [--user] - Notebook: File -> Jupytext -> Pair Notebook with light (fewer markers) or percent (explicit cell delimiters) script

- If you now save the notebook, a corresponding .py version will be updated automatically!

- Install as Jupyter lab extension

jupyter labextension install jupyterlab-jupytext- Lab: View -> Activate Command Palette (Ctrl+Shift+C) -> Pair Notebook with …

- If you now save the notebook, a corresponding .py version will be updated automatically!

Idiot's guide to resource migration in Terraform

Sometimes we need to migrate resources that are managed in Terraform. Terraform is a declarative language to manage cloud infrastructure from code, which allows you to reliably automate your deployments and put your infrastructure configuration under version control. We call this Infrastructure as Code (IaC).

When moving resources in your IaC, Terraform will by default delete the resource and re-create it at the new path. In the best case this is relatively harmless, depending on whether some downtime is acceptable, but nevertheless wasted effort because we already know the recreated resource will be identical to the removed resource. Worst case, we absolutely do not want to delete and recreate the resource, for example because it holds data (think: storage accounts, databases). Another reason you do not want to recreate resources is if they use on system-assigned identities for connecting to other resources (role-based access that is tied to the lifecycle of the resource itself). In that case, recreating the resource will likely break functionality.

So how do we properly migrate cloud resources managed by Terraform? Properly means that Terraform will update its pointer to the remote resource and that the remote resource itself remains unaffected. There are various scenarios in which you need to migrate Terraform resources:

Scenario A and B are the most interesting scenarios. In particular scenario B is a superset of scenarios C and D, so they are discussed in one go.

Scenario A: moving resources within the same Terraform state ¶

After moving, it is optional to keep the move statements around.

I tend to keep them around until everything has been working great for a while, because the moved blocks are essentially an explicit history of how you manipulated the Terraform state.

Scenario B: moving a resource to another Terraform state ¶

This scenario is a lot more tricky! This is because a failure to correctly remove blocks from the Terraform state will trigger deletion of those resources.

Always carefully inspect your plans before applying anything.

In this example, we will move one resource from module A to module B. Module A and B each have their own backend and Terraform state.

What can go wrong? ¶

Importing without removing ¶

A lot of extra complexity arises from the fact that during your refactor, your teammates will probably happily keep on working in another branch. During the migration, it is absolutely necessary to communicate this with teammates and do not make any other infra changes in the same Terraform states in the meantime.

We have to deploy module A first because it contains fundamental resources (such as networking: VNets, subnets, endpoints) that all subsequent modules depend on.

So the deployment order is: A -> B

Let’s consider two scenarios.

Let’s say you migrate a resource from module A to B.

First you remove the resource block from the infrastructure as code.

If you forget to correctly remove the resource from the Terraform state with a removed block in A, applying the Terraform plan will delete the resource and then re-create it at B.

In this case you have de facto migrated, but if your resources contains data you have just messed up.

Let’s say you migrate a resource from module B to A.

If you forget to make and inspect a Terraform plan for all involved modules and find out too late that your removed blocks from the Terraform state of B contain mistakes, you wil happily delete the resources that you have just imported in the state of A.

This scenario is even more nasty, because at the end of the pipeline you have not only possibly thrown away data or identities tied to that specific resources, but also end up without that resource altogether.

This requires manual intervention to fix.

Do not apply IaC from different branches ¶

When you are migrating resources from a refactoring branch, it’s very important to communicate with your teammates when the actual migration starts in order to ensure nobody manipulates the Terraform states from another branch with different IaC.

Some important safeguards should be in place:

If these guardrails are in place, you can only mess up on the development environment. For the sake of argument, let’s think through what happens if multiple people apply different IaC on the development environment.

This is Hank.

Hank was living the good life and followed this migration guide.

In the A -> B scenario he is doing a dry run of his migration by applying the infra changes on the development environment before merging his IaC to the main branch and committing to the whole migration.

But now he found out that someone he thought he knew well is not who he seemed to be. That persons is applying old IaC to the development environment and on top of that, is breaking bad completely because they didn’t even check the Terraform plan before applying. Now Hank is worried and wondering why his database disappeared on dev.

What happened?

Hank migrated a database from Terraform state B to state A on his development branch. During his testing run on dev, he rolled out these changes. Our bad guy rolls out the old IaC from another branch. Terraform notices that there is a database in state A that is not in the IaC, so will happily generate a plan to throw away the database.

An optional guardrail is to use data blocks for resources that actually contain data, such as databases or critical storage accounts.

It depends really on whether you want to live dangerously or not:

For believe me: the secret for harvesting from existence the greatest fruitfulness and greatest enjoyment is —- to live dangerously. - Friedrich Nietzsche

Disaster recovery ¶

Before doing a migration, make a backup of your terraform states.

You can use terraform state pull for this.

What do you do when things go wrong anyways? At this point, you have to accept shit is FUBAR and that you have to make manual interventions without making further mistakes under stress.

Honestly, this is context dependent, but it’s not as simple as just reverting the IaC changes and restoring the old Terraform state (you could use terraform state push, which has some safeguards built-in).

If things went bad, your infrastructure has probably changed so your old Terraform states are outdated.

If it’s possible to recover deleted or changed infrastructure (e.g. if you have soft deletes and recovery procedures) to exactly match the old IaC, you could try to revert IaC changes and restore the old state to reach a point where you end up with a neutral Terraform plan.

The downside? Not only have you possibly thrown away data, you are back to square one with the migration.

Instead, I’d personally adopt a “fix forward” strategy: accept you messed up, make sure all resources are rolled out like they were supposed to, and make ad-hoc changes to the Terraform states using imports and removals.

Some Terraform gotchas (as of March 2024) ¶

To conclude, some gotchas I encountered during a migration I performed recently.

Import blocks still do not support the count keyword, but since recently they do support for_each.

Resources created with a count parameter are indexed with an integer value, like azurerm_storage_account.storage_account[0].

This index must be numerical and cannot be a string.

If you have a high count (let’s say 100) or the amount of resources is non-static because it depends on a variable that’s different for each deployment environment, you do not want to manually type 100 import blocks.

What you want to do instead is create a list or mapping that you can pass to for_each such that you can create a numerical range.

You can pass a list to for_each, but this will implicitly create a map with identical keys and values.

Be careful with Terraform map objects, because the key is always a string even if you try to cast it to a number.

You will end up with azurerm_storage_account.storage_account["0"] and this will not work for obvious reasons.

Instead, just use the range function and use each.value.

Also don’t forget to update to the latest Terraform version, because the for_each argument to import and removed blocks are only available since 1.7.

Related notes:

Self portraits using stable diffusion

I was in need of a head shot, but I don’t like taking pictures. Being a programmer I figured, why not let AI magically turn a messy selfie into a proper professional head shot? As it turns out, there’s quite a market for AI tools that generate professional portraits that are suitable for LinkedIn and such, but they were requesting fees I wasn’t willing to pay. I hoped to do some experiments on a Midjourney trial license instead, but they discontinued those. No problemo, this is how this post was conceived: let’s see how far we get with a pretrained stable diffusion model without further fine-tuning on my face.

I used CompVis/stable-diffusion-v1-4, which was initially trained on 256x256 images from laion-aesthetic data sets and further fine-tuned on 512x512 images. This is a text-to-image model trained to generate images based on a text prompt describing the scene, but it’s also possible to initialize the inference process with a reference picture. It’s possible to tweak the relative strength of the reference image and the “hallucination” of the model based on the prompt. I played around with two selfies that I cropped to a 1:1 ratio and then resized to 512x512. The first reference picture was a selfie with my huge Monstera in the background. The “head and shoulders” setup is also quite common for portraits so I expected this layout to be well represented in the training data.

It’s not hard to generate stunning pictures if you let the model hallucinate. The top two pictures are very loosely inspired by the reference picture (the green from the Monstera subtly returns in the background; heavy mustache) but don’t look like me at all. The next two pictures attribute more importance to the reference picture and stay close to the original layout of the scene and main characteristics of my face (mainly my hair and beard style), but other than that didn’t look like me at all. My main observation at this point is that …

trying to stay close to a reference portrait without fine-tuning on the subject is a one-way ticket to the uncanny valley.

I was initially sceptical, but by now I’m convinced that using generative AI in this manner to create a specific picture you have in mind truly is a creative process. It is similar to how a professional photographer sets up the environment for the right snapshot: the art is not in the pressing of the button, but on using the tools available to materialize something that only existed in your mind’s eye so far. Similarly, prompt engineering is an art in the sense that over time you start to get a feeling for which prompts are successful for manipulating this stochastic “obscene hallucination machine” in a desired direction.

Only after including the names of painters and artists I started seeing results that I liked. For example, I added jugendstil artist Alphone Mucha to the prompt. These results are obviously not “realistic,” but in for example the top left picture I started recognizing a bit of myself in the glance of the eyes. The style worked really well with the Monstera in the background.

I tend to be compared to Vincent van Gogh and you can probably see why in the final results. These are based on a different picture that I sent to my better half when I was, quite frankly, done with my day. When I threw Vincent van Gogh and Edvard Munch in the mix, I finally managed to produce a stylized version of a picture that actually looked like me. Lo and behold, the top left picture is a stylized and “painted” version of what I actually look like. Everyone that knows me will immediately recognize me in this picture.

To achieve the goal of getting a professional looking head shot, the next step is to finetune a stable diffusion model on pictures of my own face. This should make it possible to let the model do its magic on the background and the styling, but stay closer to my actual face.

My Vinyl Record Collection

I added a digital vinyl record display to this website, have a look!

The idea was to digitally reproduce one of those fancy wall mounts for displaying records in your room. You can click on each record to see some basic information.

All vinyl records I physically own are registered on Discogs. I used the Discogs API to automatically retrieve my collection, parse the information I want, and store all results in Markdown files that can be consumed on this website.

If you like what you see, I open sourced my code to reproduce these results so you can make your own digital wall too. The project’s README explains how to run the project using your own Discogs collection. The only thing you need is a Discogs user name, Python, and optionally an authentication token to get links to cover images. Also note that Discogs throttles requests per minute, so check out my tip on deployment too.

If you like this project, give it a star on Github and share a link to your digital record collection. I’d love to see it!

Version control on notebooks using pre-commit and Jupytext

Notebooks have a place and a time. They are suitable for sharing the insights of an exploratory data analysis, but not so convenient for collaborating with multiple people whilst having the notebook code under version control. Generally speaking notebooks do not promote good coding habits, for example because people tend to duplicate code by copying cells. People typically also don’t use supportive tooling such as code linters and type checkers. But one of the most nasty problems with notebooks is that version control is hard, for several reasons. Running a diff on notebooks simply sucks. The piece of text we care about when reviewing - the code - is hidden in a JSON-style data structure that is interleaved with base-64 encoded blobs for images or binary cell outputs. Particularly these blobs will clutter up your diffs and make it very hard to review code. So how can we use notebooks with standard versioning tools such as Git? This post explains an automated workflow for good version control on Jupyter and Jupyter Lab notebooks using Git, the pre-commit framework, and Jupytext. The final pre-commit configuration can be found here.

Which type of notebooks need a version control workflow? ¶

But let’s first take a step back. Whether we actually need version control depends on what the purpose of the notebook is. We can roughly distinguish two types of notebooks.

Firstly, we have exploratory notebooks that can be used for experimentation, early development, and exploration. This type of notebook should be treated as a historical and dated (possibly outdated!) record of some analysis that provided insights at some moment in a project. In this sense the notebook code does not have to be up to date with the latest changes. For this type of “lab” notebook, most difficulties concerning version control can be avoided by following these recommendations:

Secondly, in some workflows one may want to collaborate with multiple people on the same notebook or even have notebooks in production (looking at you, Databricks). In these cases, notebooks store the most up-to-date and polished up output of some analysis that can be presented to stakeholders. But the most notable difference is that this type of notebook is the responsibility of the whole data science team. As a result, the notebook workflow should support more advanced version control, such as the code review process via pull requests and handling merge conflicts. The rest of this post explains such a workflow.

General recommendations ¶

To start, I make these general recommendations for all notebooks that are committed to a Git repository:

Motivation of the chose workflow ¶

The core idea of this workflow is to convert notebooks to regular scripts and use these to create meaningful diffs for reviewing. This basic workflow could work something like this:

To convert to HTML: jupyter nbconvert /notebooks/example.ipnb --output-dir="/results" --output="example.html".

To convert to Python: jupyter nbconvert /notebooks/example.ipnb --to="python" --output-dir="/results" --output="example" or the newer syntax jupyter nbconvert --to script notebook.ipynb.

Notice the absence of the .py extension if you specify a to argument.

Multiple notebooks can be converted using a wildcard.

If you want to have executed notebooks in your production environment, you can 1) commit the Python version of the notebook and 2) execute the notebook with a call to nbconvert in a pipeline, such as Github Actions (

example GitHub Actions workflow).

This allows you to execute a notebook without opening the notebook in your browser.

You execute a notebook with cleared outputs as such:

jupyter nbconvert --to notebook --execute notebook_cleared.ipynb --output notebook_executed

However, a downside of nbconvert is that the conversion is uni-directional from notebook to script, so one cannot reconstruct the notebook after making changes in the generated script.

In other words, the corresponding script would be used strictly for reviewing purposes, but the notebook would stay the single source of truth.

The jupytext tool solves this problem by doing a bi-directional synchronization between a notebook and its corresponding script.

We should also note that this workflow requires several manual steps that can be easily forgotten and messed up.

It is possible to write post-save Jupyter hooks to automate these steps, but a limitation is that such a configuration would be user-specific.

It is also possible to use Git hooks, but these are project-specific and would require all team members to copy in the correct scripts in a project’s .git folder.

Instead, we’d like to describe a workflow that can be installed in a new project in a uniform manner, such that each team member is guaranteed to use the same workflow.

We’ll use the multi-language pre-commit framework to install a jupytext synchronization hook.

What is Jupytext? ¶

Jupytext is a tool for two-way synchronization between a Jupyter notebook and a paired script in

many languages.

In this post we only consider the .ipynb and .py extensions.

It can be used as a standalone command line tool or as a plugin for Jupyter Notebooks or Jupyter Lab.

To save information on notebook cells Jupytext either uses a minimalistic “light” encoding or a “percent” encoding (the default).

We will use the percent encoding.

The following subsections are applicable only if you want to be able to use Jupytext as a standalone tool.

If not, skip ahead to here.

Installation and pairing ¶

Basic usage ¶

When you save a paired notebook in Jupyter, both the .ipynb file and the script version are updated on disk.

On the command line, you can update paired notebooks using jupytext --sync notebook.ipynb or jupytext --sync notebook.py.

If you run this on a new script, Jupytext will encode the script and define cells using some basic rules (e.g. delimited by newlines), then convert it to a paired notebook.

When a paired notebook is opened or reloaded in Jupyter, the input cells are loaded from the text file, and combined with the output cells from the .ipynb file.

This also means that when you edit the .py file, you can update the notebook simply by reloading it.

You can specify a project specific configuration in jupytext.toml:

formats = "ipnb,py:percent"

To convert a notebook to a notebook without outputs, use jupytext --to notebook notebook.py .

Combining Jupytext with pre-commit ¶

Okay, so Jupytext handles the two-way synchronization between scripts and outputs, which is an improvement compared to Jupyter’s native nbconvert command.

The basic idea is still that when you want notebook code reviewed, collaborators can instead read and comment on the paired script.

It is now also possible to incorporate the feedback directly in the script if we wish to do so, because the notebook will be updated accordingly.

Our broader goal was to completely remove any need for manual steps. We will automate the synchronization step using a pre-commit hook, which means that we check the synchronization status before allowing work to be committed. This is a safeguard to avoid to avoid that out of sync notebooks and scripts are committed.

Git hooks are very handy, but they go in .git/hooks and are therefore not easily shared across projects.

The pre-commit package is designed as a multi-language package manager for pre-commit hooks and can be installed using pip: pip install pre-commit.

It allows you to specify pre-commit hooks in a declarative style and also manages dependencies, so if we declare a hook that uses Jupytext we do not even have to manually install Jupytext.

We declare the hooks in a configuration file.

We also automate the “clear output cells” step mentioned above using nbstripout.

My .pre-commit-config.yaml for syncing notebooks and their script version looks like this:

repos:

- repo: https://github.com/kynan/nbstripout

rev: 0.6.1

hooks:

- id: nbstripout

- repo: https://github.com/mwouts/jupytext

rev: v1.14.1

hooks:

- id: jupytext

name: jupytext

description: Runs jupytext on all notebooks and paired files

language: python

entry: jupytext --pre-commit-mode --set-formats "ipynb,py:percent"

require_serial: true

types_or: [jupyter, python]

files: ^notebooks/ # Only apply this under notebooks/

After setting up a config, you have to install the hook as a git hook: pre-commit install.

This clones Jupytext and sets up the git hook using it.

Now the defined commit wil run when you git commit and you have defined the hook in a language agnostic way!

In this configuration, you manually specify the rev which is the tag of the specifiek repo to clone from.

You can test if the hook runs by running it on a specific file or on all files with pre-commit run --all-files.

Explanation and possible issues ¶

There are some important details when using Jupytext as a pre-commit hook. The first gotcha is that when checking whether paired notebooks and scripts are in sync, it actually runs Jupytext and synchronizes paired scripts and notebooks. If the paired notebooks and scripts were out of sync, running the hook will thus introduce unstaged changes! These unstaged changes also need to be committed in Git before the hook passes and it is recognized that the files are in sync.

The second gotcha is that the --pre-commit-mode flag is important to avoid a subtle but very nasty loop.

The standard behavior of jupytext --sync is to see which two of the paired files (notebook or script) was most recently edited and has to be taken as the ground truth for the two way synchronization.

This is problematic because this causes a loop when used in the pre-commit framework.

For example, let’s say that we have a paired notebook and script and that we edit the script.

When we commit the changes in the script, the pre-commit hook will first run Jupytext.

In this case the script is the “source of truth” for the synchronization such that the notebook needs to be updated.

The Jupytext pre-commit hook check will fail because we now have unstaged changed in the updated notebook that we need to commit to Git.

When we commit the changes in the updated notebook, however, the notebook becomes the most recently edited file, such that Jupytext complains that it is now unclear whether the notebook or the script is the source of truth.

The good news is that Jupytext is smart enough to raise an error and requires you to manually specify which changes to keep.

The bad news is that in this specific case, this manual action does not prevent the loop: we’re in a Catch 22 of sorts!

The --pre-commit-mode fixes this nasty issue by making sure that Jupytext does not always consider the most recently edited file as the ground truth.

Within the pre-commit framework you almost certainly also want to specify other hooks.

For example, I want to make sure my code is PEP8 compliant by running flake8 or some other linter on the changes that are to be committed.

The pre-commit framework itself also offers hooks for fixing common code issues such as trailing whitespaces or left-over merge conflict markers.

But this is where I’ve encountered another nasty issue that prevented the Jupytext hook from correctly syncing.

Let’s take a hook that removes trailing white spaces as an example. This hook works as intended on Python scripts, but the hook does not actually remove trailing white spaces in the code because source code of notebook cells are encapsulated in a JSON field as follows:

{

"cell_type": "code",

"execution_count": null,

"id": "361b20cc",

"metadata": {

"gather": {

"logged": 1671533986727

},

"jupyter": {

"outputs_hidden": false,

"source_hidden": false

},

"nteract": {

"transient": {

"deleting": false

}

}

},

"outputs": [],

"source": [

"#### pre-processing\n",

"from src.preprocessing import preprocess_and_split\n",

"\n",

"df_indirect, df_direct, df_total = preprocess_and_split(df)"

]

}

This means that if you commit a notebook with trailing white spaces in the cells, the following happens and will prevent the paired notebook and script from ever syncing:

- The Jupytext hook synchronizes the notebook with a paired Python script.

- Trailing whitespaces are removed from the Python script and the plain text representation of the notebook (i.e. it removes trailing white space after the closing brackets)

- When translating the notebook to code or vice versa, one version still includes trailing white spaces in the code cells and the other not.

This is something to be wary of and needs to be solved on a case to case basis.

I have solved this specific issue by adding all notebooks in the notebooks folder as per my recommendation and then not running the trailing whitespace hook in the notebooks folder.

Putting it all together ¶

The following is a .pre-commit-config.yaml that synchronizes all notebooks with Python scripts under notebooks and plays nice with other pre-commit hooks:

# Install the pre-commit hooks below with

# 'pre-commit install'

# Run the hooks on all files with

# 'pre-commit run --all'

repos:

- repo: https://github.com/pre-commit/pre-commit-hooks

rev: v4.4.0

# See: https://pre-commit.com/hooks.html

hooks:

- id: trailing-whitespace

types: [python]

exclude: ^notebooks/ # Avoids Jupytext sync problems

- id: end-of-file-fixer

- id: check-merge-conflict

- id: check-added-large-files

args: ['--maxkb=5000']

- id: end-of-file-fixer

- id: mixed-line-ending

# Run flake8. Give warnings, but do not reject commits

- repo: https://github.com/PyCQA/flake8

rev: 6.0.0

hooks:

- id: flake8

args: [--exit-zero] # Do not reject commit because of linting

verbose: true

# Enforce that notebooks outputs are cleared

- repo: https://github.com/kynan/nbstripout

rev: 0.6.1

hooks:

- id: nbstripout

# Synchronize notebooks and paired script versions

- repo: https://github.com/mwouts/jupytext

rev: v1.14.1

hooks:

- id: jupytext

name: jupytext

description: Runs jupytext on all notebooks and paired files

language: python

entry: jupytext --pre-commit-mode --set-formats "ipynb,py:percent"

require_serial: true

types_or: [jupyter, python]

files: ^notebooks/ # Only apply this under notebooks/

The final workflow for version control on notebooks is as follows:

- Install Git pre-commit hooks in your project based on the declarative config using

pre-commit install. - Save notebooks under the

notebooksfolder - Work on your Jupyter notebooks as usual

- Commit your work. This triggers the pre-commit hook

- If the pre-commit hook fails and introduces new changes, commit those changes too for the hook checks to pass

- Now all checks should pass and you are free to commit and push!

- When you make a PR, collaborators can now provide comments on the paired script

- Feedback can be incorporated either in the notebook or the script since they are synchronized anyways

Here we have assumed you have created and specified .pre-commit-config.yaml and jupytext.toml in your project root.

Further reading ¶

- Jupytext docs

- Jupytext docs on collaborating on notebooks.

- There are some notebook aware tools for diffing and merging

- E.g.

nbdiffandnbmergecommands from nbdime - ReviewNB GitHub app

- Neptune

- E.g.

- https://www.svds.com/jupyter-notebook-best-practices-for-data-science/

- http://timstaley.co.uk/posts/making-git-and-jupyter-notebooks-play-nice/

- https://innerjoin.bit.io/automate-jupyter-notebooks-on-github-9d988ecf96a6

- https://nextjournal.com/schmudde/how-to-version-control-jupyter

Overgeven of Sneuvelen: De Atjeh-oorlog

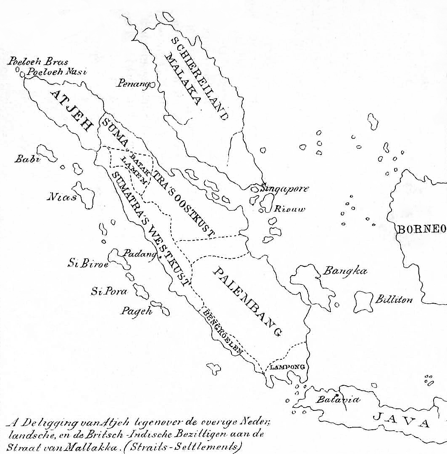

In 1873 valt Nederland de zelfstandige staat Atjeh binnen, gelegen op de noordpunt van Sumatra. De Nederlanders verwachten Atjeh snel te kunnen onderwerpen. Dat blijkt een illusie te zijn. Ze belanden in een oorlog die de langste en bloedigste uit de Nederlandse koloniale geschiedenis zal worden: de Atjeh-oorlog.

Overgeven of sneuvelen is de enige roman in de Nederlandse literatuur die de volledige Atjeh-oorlog beschrijft. Ik vraag de auteur, Bert Wenink, wat hem heeft gedreven vijf jaar aan dit boek te werken. Bestel het boek via zijn website of via boekenbestellen.nl.

EW: Waarom heb je een boek over de Atjeh-oorlog geschreven?

BW: Als ik het over de Atjeh-oorlog heb zie je mensen denken: wat voor een fascinatie heeft een witte man zonder Indische connecties, zonder voorvaderen die in de Oost hebben gevochten, met de Atjeh-oorlog? Mijn antwoord is dan: verwondering, sentiment en inbeelding. Als kind logeerde ik regelmatig bij mijn oma. Ze woonde in Arnhem, in een zijstraat van de Velperweg, dezelfde weg waaraan ook Bronbeek ligt, het museum van de Nederlandse geschiedenis in Indië en rusthuis voor veteranen die in het Koninklijk Nederlands Indisch Leger hadden gediend. Daar zaten ze met goed weer in de tuin: de oude mannetjes in uniform met lange grijze baarden, de ex-KNILers. Met die bijzondere, toen al anachronistische verschijning zijn waarschijnlijk de eerste zaadjes van fascinatie geplant. Later bezocht ik met mijn vader het museum. Ik kan me er weinig van herinneren, maar het moet indruk gemaakt hebben. Het was nog de tijd van het tentoonstellen van exotische trofeeën, van krissen, speren en klewangs aan de muren en buitgemaakte kanonnen in de gangen.

Nog weer later, veel later, ben ik drijvend op die sentimenten zelf terug gegaan, en toen begon de verwondering. Daar in Bronbeek hing in de gang aan de muur een fragment uit de Atjeh-oorlog, geschreven door een zekere Schoemaker, een echte nationalist is me later gebleken toen ik meer van hem las. Iemand die kritiekloos de heldendaden van ‘onze’ jongens ophemelde, maar wel gezegend met een uitstekende pen waarmee hij taferelen levensecht tevoorschijn toverde. Ik wilde steeds meer weten van die exotische, verbijsterende wereld die de Atjeh-oorlog is. Nieuwsgierigheid doet de rest. Waarom wagen jonge mannen zich in de hel van Indië? Wat deed één van Nederlands grootste geleerden uit de 19e eeuw op het slagveld van Atjeh, in een tijd dat de meeste geleerden gewoon achter hun bureau bleven zitten? En waarom vochten inheemse soldaten, in dienst van het KNIL, zo fanatiek voor de Nederlanders, waarom stormden die het eerst tegen de wand van een benteng op met het risico ook als eerste te sneuvelen? Al die vragen, waarbij de antwoorden je van de ene in de andere verbazing doen belanden, waren de drijfveren om steeds meer over de oorlog te lezen en er een roman over te schrijven. Het schrijven was een soort ouderwetse negentiende-eeuwse ontdekkingstocht.

EW: Is het boek historisch verantwoord?

BW: Overgeven of sneuvelen geeft een prima overzicht van de belangrijkste gebeurtenissen uit de Atjeh-oorlog. Bij de historische personen heb ik mijn fantasie zo weinig mogelijk de ruimte gegeven. Anders gezegd: ik laat Van Heutsz, Van Daalen, Sjech Saman di Tiro of Colijn geen verzonnen fratsen uithalen. De echte romanpersonages hebben niet bestaan, maar hun belevenissen spelen zich af in een historische context en zouden echt zo gebeurd kunnen zijn. Ik heb me zeer uitgebreid gedocumenteerd. In mijn boek, en ook op mijn website, heb ik een lijst van ruim honderd geraadpleegde boeken en artikelen opgenomen. Daarnaast heb ik een zeer uitvoerige correspondentie met een militair deskundige en Atjeh-kenner gevoerd.

EW: Je hebt dus veel historisch onderzoek gedaan. Waarom heb je dan toch de vorm van een roman gekozen?

BW: Dat heeft met inbeelding te maken, en natuurlijk met mijn achtergrond; ik ben neerlandicus. Ik zou zelf niet graag meemaken wat de KNIL-soldaten moesten doorstaan, maar meeleven in de vorm van literatuur is veilig genoeg om jezelf onder te dompelen in die ongelofelijke wereld. Ik wilde de hele Atjeh-oorlog beschrijven, en dan met name door de ogen van personages en er ook nog een spannend verhaal met een plot van maken. Een ambitieus plan.

EW: Kunnen we tegenwoordig nog iets leren van de Atjeh-oorlog?

BW: Hoe vecht je tegen een vijand waarbij burgers en strijders nauwelijks te onderscheiden zijn? Waar kan een overhaast ten strijde trekken toe leiden? Vragen die nog steeds actueel zijn. Het Atjese verzet was, net zoals in veel tegenwoordige oorlogen, islamitisch geïnspireerd. De strategische lessen uit de Atjeh-oorlog gelden onverminderd voor hedendaagse oorlogen. En wat mij betreft wierp het geweld uit de Atjeh-oorlog zijn schaduwen vooruit op de koloniale strijd na de Tweede Wereldoorlog. Ik zou bijna zeggen: de Atjeh-oorlog is gruwelijk actueel.

EW: De koloniale geschiedenis van Nederland in Indonesië staat momenteel volop in de aandacht. Recent onderzoek bevestigde bijvoorbeeld dat Nederland na WOII excessief geweld heeft gebruikt tijdens de Indonesische Onafhankelijkheidsoorlog. Jouw roman laat wellicht zien dat dat niet zo’n verrassing is gezien de gruwelijkheden van de Atjeh-oorlog. Wat is jouw morele oordeel over de Atjeh-oorlog?

BW: Verwondering en verbazing waren mijn drijfveren om steeds meer over de oorlog te lezen en er een roman over te schrijven. Pas nadat ik ‘m af had kwamen andere, meer rationele vragen. Over de lessen die we van de Atjeh-oorlog kunnen leren, over de beschaving die de Nederlanders wilden brengen, over de morele aspecten. Mijn blik was – denk ik – niet oordelend, eerder met een zekere compassie voor de militairen, maar zeker niet vergoelijkend. De lezer trekt zelf wel zijn conclusies.

EW: De roman neemt inderdaad geen stelling en biedt de lezer ruimte een eigen oordeel te vellen. Toch is het zo dat de roman vanuit het historische perspectief van Nederland is geschreven. Is dat iets waar je mee hebt geworsteld tijdens het schrijven? In hoeverre is het nodig en mogelijk het perspectief en de beweegredenen van de Atjeeërs te vertolken, of blijven zij toch de stemlozen van de koloniale geschiedenis?

BW: Ik ben me er zeker van bewust dat de roman vanuit Nederlands perspectief is geschreven, al was het maar omdat ik was aangewezen op Nederlandse boeken, waarvan ook nog eens vaak voormalige officieren de auteurs waren. Voor zover mij bekend is er trouwens nauwelijks documentatie van Atjese kant, en mocht die er zijn dan kan ik er niet mee uit de voeten, omdat ik de taal niet machtig ben. De rol om de oorlog vanuit Atjees perspectief te belichten zou ik me trouwens sowieso niet graag toe-eigenen. Ik pretendeer daarom zeker niet de Atjese kant van de oorlog goed te vertolken. Toch hoop ik er wel indirect aan bij te dragen de Atjeeërs een stem te geven. Te zorgen dat de oorlog niet vergeten wordt, lijkt me daarbij een eerste stap.